A prodrome is an early stage of a condition that might have different symptoms than the full-blown version. In psychiatry, the prodrome of schizophrenia is the few-months-to-few-years period when a person is just starting to develop schizophrenia and is acting a little bit strange while still having some insight into their condition.

There’s a big push to treat schizophrenia prodrome as a critical period for intervention. Multiple studies have suggested that even though schizophrenia itself is a permanent condition which can be controlled but never cured, treating the prodrome aggressively enough can prevent full schizophrenia from ever developing at all. Advocates of this view compare it to detecting early-stage cancers, or getting prompt treatment for a developing stroke, or any of the million other examples from medicine of how you can get much better results by catching a disease very early before it has time to do damage.

These models conceptualize psychosis as “toxic” – not just unpleasant in and of itself, but damaging the brain while it’s happening. They focus on a statistic called Duration of Untreated Psychosis (DUP). The longer the DUP, the more chance psychosis has had to damage the patient before the fire gets put out and further damage is prevented. Under this model it’s vitally important to put people who seem to be getting a little bit schizophrenic on medications as soon as possible.

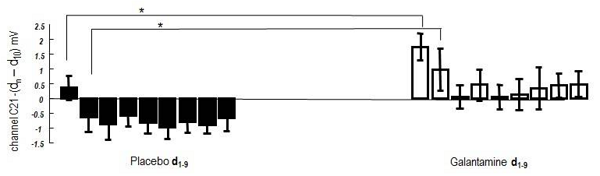

There has been a lot of work on this theory, but not a lot of light has been shed. Observational studies testing whether duration of untreated psychosis correlates with poor outcome mostly find it does a little bit, but there’s a lot of potential confounding – maybe lower-class uneducated people take longer to see a psychiatrist, or maybe people who are especially psychotic are especially bad at recognizing they are psychotic. The relevant studies try their hardest to control for these factors, but remember that this is harder than you think. The randomized controlled trials of what happens if you intervene earlier in psychosis tend to do very badly and rarely show any benefit, but randomly intervening earlier in psychosis is hard, especially if you also need an ethics board’s permission to keep a control group of other people who you are not going to intervene early on. Overall I could go either way on this.

Previously I was leaning toward “probably not relevant”, just because it’s too convenient. There is a lot of debate about how aggressively to treat schizophrenia, with mainstream psychiatry (and their friends the pharma companies) coming down on the side of “more aggressively”, while other people point out that antipsychotics have lots of side effects and their long-term effects (both how well they work long-term, and what negative effects they have long-term) are poorly understood. These people tend to come up with kind of wild theories about how long-term antipsychotics hypersensitize you and make you worse. I don’t currently find these very credible, but I’m also skeptical of things that are too convenient to the mainstream narrative, like “unless you treat every case of schizophrenia right away you are exposing patients to toxicity, and every second you fail to give the drugs makes them irreversibly worse forever!” And I know a bunch of people whose level of psychosis hovers at “mild” and has continued to do so for decades without the lack of treatment making it much worse.

After learning more about the biology of schizophrenia, I’ve become more willing to credit the DUP model. I can’t give great sources for this, because I’ve lost some of them, but this Friston paper, this Fletcher & Frith paper, and Surfing Uncertainty all kind of point to the same model of why untreated schizophrenia might get worse with time.

In their system, schizophrenia starts with aberrant prediction errors; the brain becomes incorrectly surprised by some sense-data. Maybe a fly buzzes by, and all of a sudden the brain shouts “WHOA! I WASN’T EXPECTING THAT! THAT CHANGES EVERYTHING!” Your brain shifts its resources to coming up with a theory of the world that explains why that fly buzzing by is so important – or perhaps one that maximizes its ability to explain that particular fly at the cost of everything else.

Talk to early-stage schizophrenics, and their narrative sounds a lot like this. They’ll say something like “A fly buzzed by, and I knew somehow it was very significant. It must be a sign from God. Maybe that I should fly away from my current life.” Then you’ll tell them that’s dumb, and they’ll blink and say “Yeah, I guess it is kind of dumb, now that you mention it” and continue living a somewhat normal life.

Or they’ll say “I was wondering if I should go to the store, and then a Nike ad came on that said JUST DO IT. I knew that was somehow significant to my situation, so I figured Nike must be reading my mind and sending me messages to the TV.” Then you’ll remind them that that can’t happen, and even though it seemed so interesting that Nike sent the ad at that exact moment, they’ll back down.

But even sane people change their beliefs more in response to more evidence. If a friend stepped on my foot, I would think nothing of it. If she did it twice, I might be a little concerned. If she did it fifty times, I would have to reevaluate my belief that she was my friend. Each piece of evidence chips away at my comfortable normal belief that people don’t deliberately step on my feet – and eventually, I shift.
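To make the arithmetic of “each piece of evidence chips away” concrete, here is a minimal sketch of that kind of updating in Python. The hypothesis names, the prior, and both likelihoods are made-up numbers chosen for illustration; nothing here comes from the papers mentioned above.

```python
def posterior_after(n_events, prior=0.001, p_if_hostile=0.3, p_if_friendly=0.2):
    """Probability of the 'she is doing it on purpose' hypothesis after
    n_events identical observations (all numbers are illustrative assumptions)."""
    # Odds form of Bayes' rule: posterior odds = prior odds * likelihood_ratio ** n
    prior_odds = prior / (1 - prior)
    likelihood_ratio = p_if_hostile / p_if_friendly
    posterior_odds = prior_odds * likelihood_ratio ** n_events
    # Convert odds back to a probability
    return posterior_odds / (1 + posterior_odds)


for n in (1, 2, 50):
    print(n, posterior_after(n))
```

With these assumed numbers, one or two foot-stompings barely move the belief, while fifty of them push it close to certainty. No single event is decisive; the accumulation is.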

The same process happens as schizophrenia continues. One fly buzzing by with cosmic significance can perhaps be dismissed. But suppose the next day, a raindrop lands on your head, and there’s another aberrant prediction error burst. Was the raindrop a sign from God? The evidence against is that this is still dumb; the evidence for is that you had both the fly and the raindrop, so your theory that God is sending you signs starts looking a little stronger. I’m not talking about this on the conscious level, where the obvious conclusion is “guess I have schizophrenia”. I’m talking about the pre-conscious inferential machinery, which does its own mechanical thing and tells the conscious mind what to think.

As schizophrenics encounter more and more strange things, they (rationally) alter their high-level beliefs further and further. They start believing that God often sends signs to people. They start believing that the TV often talks especially to them. They start believing that there is a conspiracy. The more aberrant events they’re forced to explain, the more they abandon their sane views about the world (which are doing a terrible job of predicting all the strange things happening to them) and adopt psychotic ones.

But since their new worldview (God often sends signs) gives a high prior on various events being signs from God, they’ll be more willing to interpret even minor coincidences as signs, and so end up in a nasty feedback loop. From the Fletcher and Frith paper:

Ultimately, someone with schizophrenia will need to develop a set of beliefs that must account for a great deal of strange and sometimes contradictory data. Very commonly they come to believe that they are being persecuted: delusions of persecution are one of the most striking and common of the positive symptoms of schizophrenia, and the cause of a great deal of suffering. If one imagines trying to make some sense of a world that has become strange and inconsistent, pregnant with sinister meaning and messages, the sensible conclusion might well be that one is being deliberately deceived. This belief might also require certain other changes in the patient’s view of the world. They may have to abandon a succession of models and even whole classes of models.

A few paragraphs later, they expand their theory to the negative symptoms of schizophrenia. That is: advanced-stage schizophrenics tend to end up in a depressed-like state where they rarely do anything or care about anything. The authors say:

Further, although we have deliberately ignored negative symptoms, it is interesting to consider whether this model might have relevance for this extremely incapacitating feature of schizophrenia. We speculate that this deficit could indeed be ultimately responsible for the amotivational, asocial, akinetic state that is characteristic of negative symptoms. After all, a world in which sensory data are noisy and unreliable might lead to a state in which decisions are difficult and actions seem fruitless. We can only speculate on whether the same fundamental deficit could account for both positive and negative features of schizophrenia but, if it could, we suggest that it would be more profound in the case of negative features, and this increased severity might be invoked to account for the strange motor disturbances (collectively known as catatonia) that can be such a striking feature of the negative syndrome.

I think what they are saying is that, as the world becomes even more random and confusing, the brain very slowly adjusts its highest-level parameters. It concludes, on a level much deeper than consciousness, that the world does not make sense, that it’s not really useful to act because it’s impossible to predict the consequences of actions, and that it’s not worth drawing on prior knowledge because anything could happen at any time. It gets a sort of learned helplessness about cognition, where since it never works it’s not even worth trying. The onslaught of random evidence slowly twists the highest-level beliefs into whatever form best explains random evidence (usually: that there’s a conspiracy to do random things), and twists the fundamental parameters into a form where they expect evidence to be mostly random and aren’t going to really care about it one way or the other.

Antipsychotics treat the positive symptoms of schizophrenia – the hallucinations and delusions – pretty well. But they don’t treat the negative symptoms much at all (except, of course, clozapine). Plausibly, the antidopaminergic effect of antipsychotics prevents the spikes of aberrant prediction error, so that the onslaught of weird coincidences stops and things only seem about as relevant as they really are.

But if your brain has already spent years twisting itself into a shape determined by random coincidences, antipsychotics aren’t going to do anything for that. It’s not even obvious that a few years of evidence working normally will twist it back; if your brain has adopted the hyperprior of “evidence never works, stop trying to respond to it”, it’s hard to see how evidence could convince it otherwise.

This theory fits the “duration of untreated psychosis” model very well. The longer you’re psychotic, with weird prediction errors popping up everywhere, the more thoroughly your brain is going to shift from its normal mode of evidence-processing to whatever mode of evidence-processing best suits receiving lots of random data. If you start antipsychotics as soon as the prediction errors start, you’ll have a few weird thoughts about how a buzzing fly might have been a sign from God, but then the weirdness will stop and you’ll end up okay. If you start antipsychotics after ten years of this kind of stuff, your brain will already have concluded that the world only makes sense in the context of a magic-wielding conspiracy plus also normal logic doesn’t work, and the sudden cessation of new weirdness won’t change that.
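Here is a toy simulation of that argument, offered as a sketch rather than as anything the cited authors wrote down. It tracks one belief (“the world is sending me signs”) in log-odds. While psychosis is untreated, each day’s aberrant prediction error adds a little evidence for the belief. The feedback loop is modeled by letting the current strength of the belief decide how often ordinary coincidences get read as signs. Every parameter name and value is an assumption made up for illustration.

```python
import math


def sigmoid(x):
    """Convert log-odds to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def simulate(untreated_days, treated_days=3650, start_log_odds=-6.0,
             burst_evidence=0.05, coincidence_evidence=0.02,
             normal_evidence=0.01):
    """Return the final belief in 'the world is sending me signs'.

    All parameters are hypothetical: this is a deterministic toy model,
    not a fit to any data.
    """
    log_odds = start_log_odds
    for day in range(untreated_days + treated_days):
        belief = sigmoid(log_odds)
        if day < untreated_days:
            # Untreated: an aberrant prediction error counts as evidence
            # for the delusional belief.
            log_odds += burst_evidence
        # Feedback loop: the stronger the belief, the more ordinary
        # coincidences are read as signs. The rest of the time, an
        # uneventful day counts mildly against the belief.
        log_odds += belief * coincidence_evidence
        log_odds -= (1.0 - belief) * normal_evidence
    return sigmoid(log_odds)


early = simulate(untreated_days=14)    # treated after two weeks
late = simulate(untreated_days=3650)   # treated after ten years
print(early, late)
```

In this toy model, stopping the aberrant bursts early leaves the belief weak enough that ordinary life erodes it. Stopping them late leaves a belief that sustains itself on ordinary coincidences, so removing the bursts no longer brings it back down.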

The Fletcher and Frith paper also tipped me off to this excellent first-person account by former-schizophrenic-turned-psychologist Peter Chadwick:

At this time, a powerful idea of reference also overcame me from a television episode of Colombo and impulsively I decided to write letters to friends and colleagues about “this terrible persecution.” It was a deadly mistake. After a few replies of the “we’ve not heard anything” variety, my subsequent (increasingly overwrought) letters, all of them long, were not answered. But nothing stimulates paranoia better than no feedback, and once you have conceived a delusion, something is bound to happen to confirm it. When phrases from the radio echoed phrases I had used in those very letters, it was “obvious” that the communications had been passed on to radio and then television personnel with the intent of influencing and mocking me. After all betrayal was what I was used to, why should not it be carrying on now? It seemed sensible. So much for my bonding with society. It was totally gone. I was alone and now trusted no one (if indeed my capacity to trust people [particularly after school] had ever been very high).

The unfortunate tirade of coincidences that shifted my mentality from sane to totally insane has been described more fully in a previous offering. From a meaningless life, a relationship with the world was reconstructed by me that was spectacularly meaningful and portentous even if it was horrific. Two typical days from this episode I have recalled as best I could and also published previously. The whole experience was so bizarre it is as if imprinted in my psyche in what could be called “floodlit memory” fashion. Out of the coincidences picked up on, on radio and television, coupled with overheard snatches of conversation in the street, it was “clear” to me that the media torment, orchestrated as inferred at the time by what I came to call “The Organization,” had one simple message: “Change or die!” Tellingly my mother (by then deceased) had had a fairly similar attitude. It even crossed my (increasingly loosely associated) mind that she had had some hand in all this from beyond the grave […]

As my delusional system expanded and elaborated, it was as if I was not “thinking the delusion,” the delusion was “thinking me!” I was totally enslaved by the belief system. Almost anything at all happening around me seemed at least “relevant” and became, as Piaget would say, “assimilated” to it. Another way of putting things was that confirmation bias was massively amplified, everything confirmed and fitted the delusion, nothing discredited it. Indeed, the very capacity to notice and think of refutatory data and ideas was completely gone. Confirmation bias was as if “galloping,” and I could not stop it.

As coincidences jogged and jolted me in this passive, vehicular state into the “realization” that my death was imminent, it was time to listen out for how the suicide act should be committed. “He has to do it by bus then?!” a man coincidentally shouted to another man in the office where I had taken an accounts job (in fact about a delivery but “of course” I knew that was just a cover story). “Yes!” came back the reply. This was indeed how my life was to end because the remark was made as if in reply to the very thoughts I was having at that moment. Obviously, The Organization knew my very thoughts.

Two days later, I threw myself under the wheels of a double decker, London bus on “New King’s Road” in Fulham, West London, to where I had just moved. In trying to explain “why all this was happening” my delusional system had taken a religious turn. The religious element, that all this torment was willed not only by my mother and transvestophobic scandal-mongerers but by God Himself for my “perverted Satanic ways,” was realized in the personal symbolism of this suicide. New King’s Road obviously was “the road of the New King” (Jesus), and my suicide would thrust “the old king” (Satan) out of me and Jesus would return to the world to rule. I then would be cast into Outer Darkness fighting Satan all the way. The monumental, world-saving grandiosity of this lamentable act was a far cry from my totally irrelevant, penniless, and peripheral existence in Hackney a few months before. In my own bizarre way, I obviously had moved up in the world. Now, I was not an outcast from it. I was saving the world in a very lofty manner. Medical authorities at Charing Cross Hospital in London where I was taken by ambulance, initially, of course, to orthopedics, fairly quickly recognized my psychotic state. Antipsychotic drugs were injected by a nurse on doctors’ advice, and eventually, I made a full physical and mental recovery.

Chadwick never got too far along; he had all the weird coincidences, he was starting to get beliefs that explained them, but he never got to a point where he shifted his fundamental concepts or beliefs about logic in an irreversible way. As far as I know he’s been on antipsychotics consistently since then, and has escaped with no worse consequences than becoming a psychology professor. I am not sure whether things would have gone worse for him without the medications, but I think it’s a possibility we have to consider.